I know, I know. It’s so much easier to discover major flaws in the design of things right after you’ve done something wrong, but… here it goes.

So yesterday I was playing around with ISO images of several Linux distros on my hard drive: organizing them, finishing torrent downloads, etc. For that reason, at some point I moved everything to another directory and removed the main directory I use for them with rm -rf ./Downloads/iso.

Then I switched to working on silme, reviewing the ta-LK localization and trying to install SoftPhone. At some point I tried to figure out why I couldn’t reach the server, so I opened another terminal window and pinged the server, with no result. I fixed a few things in my connection and wanted to ping the server again.

In order to select the right window from the background, I move my mouse to the top-right corner of my screen, which triggers Exposé mode on macOS or Linux and presents all the windows for me to select from. How much easier can it be?

Well, in this case I had to select a terminal window, and terminal windows are pretty much indistinguishable from each other. Of course I had already forgotten that I had another terminal window open at all. The lightning-fast automatic combination I performed was: select terminal, activate, arrow up, Enter.

In some universe this probably caused another me to ping the SoftPhone server successfully. But in this particular one (which I created with my choice and dragged you into with me, no offense) I deleted around 30 GB of ISO images before I force-closed the tab (Ctrl+C did not work).

The tragedy is not devastating. I can live with that. Sure, it’s a lesson for me on what not to do and when not to assume too much, but the biggest outcome (apart from restarting a few ISO downloads right away) is that it got me thinking about the broken design of the rm command in the shell.

First of all, there are two different ways I use the rm command.

1) When I want to clean up unimportant structure that is either empty or almost empty

2) When I want to remove a big chunk of data that I don’t use anymore

The first case is when I’m removing directories that were used in the past and have no value, or weight: a nested directory structure with no files or just a few, or a test directory with some test objects inside. The latter case is when I’m deleting a directory with Firefox 2.0 localizations, or an application I no longer use.

The current behavior of rm is fundamentally broken (I’m so smart now that it has failed me, heh…).

The basic operation is rm OBJECT.

This operation works on any file regardless of:

- how old it is

- how often you used it

- when you last used it

- what apps use it

In other words, deleting a file that you used 90 times in the last 3 hours, that has been on your drive since 2007, and that you opened a few times per week because it contains your thesis has exactly the same UI as deleting an empty test.dtd file from a data directory.

I call it broken. It ignores all the context that is available to it and assumes the person made a conscious choice, but we all know that using the terminal is not all about making conscious choices. It’s about performing some actions much faster. Faster equals less conscious.
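Some of that context is already sitting in the file system. Here is a minimal sketch in Python of what a single stat call exposes: size, time since the last modification and time since the last access. The helper name `file_context` is mine, and rm does nothing like this. How often a file was used and which apps use it are not recorded there, and access times can be stale on volumes mounted with noatime or relatime, so treat it as an illustration only.

```python
import os
import time


def file_context(path, now=None):
    """Return the cheap context a delete tool could check before removing `path`.

    Hypothetical helper for illustration; rm itself does nothing like this.
    """
    st = os.stat(path)
    now = time.time() if now is None else now
    day = 86400.0  # seconds per day
    return {
        "size_bytes": st.st_size,
        # Days since the content was last modified.
        "days_since_modified": (now - st.st_mtime) / day,
        # Days since the last read; may be stale on noatime/relatime mounts.
        "days_since_accessed": (now - st.st_atime) / day,
    }
```

A tool could, for example, ask for confirmation when `days_since_accessed` is small and `size_bytes` is not.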

But it gets even scarier when we get to directories. Deleting a directory is not that easy. rm dir1 will refuse to work at all just because (unlike in the previous case) dir1 is a directory. It’s harder to delete an empty directory than your thesis!

rm offers us a nice argument, -d, which makes rm treat directories like files, but does it help much? It does with empty directories. So rm -d object gives you the same UI for deleting your thesis and an empty directory, which is still broken, because deleting an empty directory is hardly data loss! The worst that can happen when you delete an empty directory is that you can’t remember its name. How scary can that be?

The next level of brokenness comes into play when we start thinking of directories with something inside. Today it’s rare to have fewer than several thousand directories. It’s getting so complex that operating systems try to abstract the file system layer away and reduce user interaction with the real file system, so that people don’t have to spend hours iterating through directories to find a file. It means that very often, when we delete an “empty” directory, the directory is not fully empty. There’s just hardly anything inside. Maybe 5 directories, maybe two small files…

From the computer’s point of view, it’s not empty. Period. From the user’s point of view it’s empty or almost empty, who cares. To cover this difference we memorize a new command: rm -rf object. It means exactly what I said: remove this and all the little things inside, and don’t bother me.

It’s important to have this method because otherwise you’d have to enter dir1, delete each empty directory inside, then enter the one subdirectory that contains the only file in the structure, delete it, go back to the parent directory and remove dir1. rm -rf dir1 solves that.

Unfortunately, the way to remove a big chunk of obsolete data is exactly the same. When I want to remove (consciously, after double-checking that I don’t need it) 12 GB of data in some directory structure, the easiest thing I can do is go to the parent directory and run rm -rf dir1.

Of course I could use a GUI, but almost every GUI tool will try to stat all the files inside to show me a nice progress bar, which in the case of deleting, for example, a mozilla-central clone will take forever, so I prefer rm -rf dir1.

There are various patterns that distinguish the two kinds of “delete” operation I intend to perform. One involves small amounts of data and usually does not happen right after scanning the directory (I don’t double-check such cases); the other is more conscious, verified, and involves large amounts of data.

What’s fundamentally broken is that for both, the best and only practical command is the one that means, more or less, “delete whatever is there and don’t bother showing me anything”. I didn’t actually mean that. I could use a confirmation dialog when deleting 6 GB of files. Really. It’s just that rm does not offer a confirmation that fits: -i asks about every single file, which is hopeless for a large tree, so in practice it’s either go or no-go. Without -r it simply refuses to work, so I’m adding -rf not because I want to avoid being bugged, but because I want to reach my goal!

So one solution might be to switch rm from “not going to happen” mode to “Are you sure you want to delete a big amount of your data?”.

Another might be to use the context of what I’m trying to delete. Except for the rather rare case of a super important password written in an otherwise empty file in an empty directory structure, when something has 10 nested directories and two files with less than 100 bytes of text inside, it’s usually safe to go. Deleting it means almost the same as deleting one empty directory or file. I should be able to do this without a warning.
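To make the “almost empty” test concrete, here is one way it might look in Python. The names `summarize` and `is_trivial` and the thresholds (`max_files`, `max_bytes`) are my assumptions, picked to match the “two files with less than 100 bytes” example. None of this is an existing rm feature.

```python
import os


def summarize(root):
    """Count directories, files and total bytes under `root` (symlinks are not followed)."""
    dirs = files = size = 0
    for current, dirnames, filenames in os.walk(root):
        dirs += len(dirnames)
        for name in filenames:
            files += 1
            # lstat so that a symlink counts as itself, not as its target.
            size += os.lstat(os.path.join(current, name)).st_size
    return {"dirs": dirs, "files": files, "bytes": size}


def is_trivial(root, max_files=2, max_bytes=200):
    """True if the tree holds so little that deleting it needs no warning.

    The thresholds are illustrative guesses, not measured values.
    """
    s = summarize(root)
    return s["files"] <= max_files and s["bytes"] <= max_bytes
```

Note that the number of directories deliberately plays no role here: ten nested empty directories weigh as much as one.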

But when I’m deleting something big and complex, I need something in between rm saying “no, it’s a dir” and “done”: a mode that notifies me about the amount of data I’m trying to delete, the number of files, the number of directories, maybe some other context data in the future, and either asks me for confirmation or gives me 3 seconds before proceeding so that I can react and stop it.
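A rough sketch of that middle way in Python, using the 3-second variant. Everything named here (`tree_stats`, `careful_rm`, the `big_bytes` and `big_files` thresholds) is hypothetical and the numbers are arbitrary. Small trees are removed silently, while big ones get a summary and a countdown that Ctrl+C can interrupt.

```python
import os
import shutil
import sys
import time


def tree_stats(root):
    """Count directories, files and bytes under `root` without following symlinks."""
    dirs = files = size = 0
    for current, dirnames, filenames in os.walk(root):
        dirs += len(dirnames)
        for name in filenames:
            files += 1
            size += os.lstat(os.path.join(current, name)).st_size
    return dirs, files, size


def careful_rm(root, big_bytes=100 * 1024 * 1024, big_files=1000,
               delay=3, sleep=time.sleep, out=sys.stderr):
    """Delete `root`; for big trees, print a summary and wait `delay` seconds first.

    Returns True if the tree was removed, False if Ctrl+C arrived during
    the countdown. Thresholds are illustrative assumptions.
    """
    dirs, files, size = tree_stats(root)
    if size >= big_bytes or files >= big_files:
        print(f"About to delete {root}: {files} files, {dirs} directories, "
              f"{size / 1e6:.1f} MB", file=out)
        try:
            for remaining in range(delay, 0, -1):
                print(f"  deleting in {remaining}s (Ctrl+C to cancel)", file=out)
                sleep(1)
        except KeyboardInterrupt:
            print("Cancelled, nothing was deleted.", file=out)
            return False
    shutil.rmtree(root)
    return True
```

The walk in `tree_stats` costs the same stat calls I complained about in GUI tools. A real implementation could stop counting as soon as a threshold is crossed, since at that point it already knows it has to ask.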

This would improve the UI of the rm command drastically and save other people’s data in the future.

Thoughts? Comments?

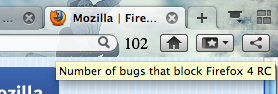
