After two parts of my vision of local communities, I’d like to make a sudden shift to write a bit about technical aspects of localization. The reason for that is trivial. The third, and last, part of the social story is the most complex one and requires a lot of thinking to put it right.

In the meantime, I work on several aspects of our l10n environment and I’d like to share with you some of the experiences and hopes around it.

Changes, changes

What I wrote in the social vision, part 1 about how the landscape of Mozilla changes and gets more complex, stands true from localization perspective and requires us to adapt in a similar fashion as it requires local communities.

There are three major shifts that I observe, that makes our approach from the past not sufficient.

- User Interfaces become more sophisticated than ever

- Product forms are becaming more diversified and new forms of mashups appear that blend web data, UI and content

- Different products have different “refresh cycles” in which different amount of content/UI is being replaced

Historically, we used DTD and properties for most of our products. The biggest issue with DTD/properties is that those two formats were never meant to be used for localization. We adapted them, exploitet and extended to match some of our needs, but their limitations are pretty obvious.

In respose to those changes, we spent significant amount of time analyzing and rethinking l10n formats to address the needs of Mozilla today and we came up with three distinct forms of data that requires localization and three technologies that we want to use.

L20n

Our major products like Firefox, Thunderbird, Seamonkey or Firefox Mobile, are becoming more sophisticated. We want to show as little UI as possible, each pixel is sacred. If we decide to take that screen piece from the user, we want to use it to maximum. Small buttons, toolbars should be denser – should present and offer more power, be intuitive and allow user to keep full control over the situation.

That exposes a major challenge to localization. Each message must be precise, clear and natural to the user to minimize his confusion. Strings are becoming more complex, with more data elements influencing them. It’s becoming less common to have plain sentences that are static. It’s becoming more common that a string will show in a tooltip, will have little screen (Mobile) and will depend on the state of other elements(number of open tabs, time, gender of the user).

DTD/properties are absolutely not ready to meet those requirements and the more hacks we implement the harder it’ll be to maintain the product and its localizations. Unfortunately other technologies that we considered, like gettext, XLIFF or QT’s TS file format are sharing most of the limitations and are being actively exploited themselves for years now (like gettext’s msgctxt).

Knowing that, we started thinking about how would localization format/technology look like if we can start it today. From the scratch. Knowing what we know. With experience that we have.

We knew that we would like to solve once and for all the problem with astonishing diversity of languages, linguistic rules, forms, variables. We knew we’d like to build a powerful tool set that would allow localizers to maintain their localizations easier, and localize with more context information (like, where the string will be used) than ever. We knew that we want to simplify the cooperation between developers and localizers. And we knew we would love to make it easy to use for everyone.

Axel Hecht came up with a concept of L20n. A format that shifts several paradigms of software localization by enabling algorithmic power outside of the source code. He’s motto is “Make easy things easy, and complex things possible” and that’s exactly what L20n does.

It doesn’t make sense to try to summarize L20n here, I’ll dig deeper in a separate blog post in this series, but what’s important for the sake of this one, is that L20n is supposed to be a new beginning, different than previous generations of localization formats, differently defining the contract between localizer and developer called “an entity”.

It targets software UI elements, should work in any environment (yes, Python, PHP, Perl too) and allow for building natural sentences with full power of each language without leaking this complexity to other locales or developers themselves. I know, sounds bold, but we’re talking about Pike’s idea, right?

Common Pool

While our major products require more complexity, we’re also getting more new products that appear in Mozilla, and very often they require little UI, because they are meant to be non-interruptive. Their localization entities are plain and simple, short and usually have single definition and translation. The land of extensions is the most prominent example of such approach, but more and more of our products have such needs.

Think of an “OK” and “Cancel” button. In 98% of cases, their translations are the same, no matter where they are used. In 98% of cases, their translations are the same among all products and platforms. On top of that there are three exceptions.

First, sometimes the platform uses different translation of the word. Like MacOS may have different translation of “Cancel” than Windows. It’s very easy, systematic difference shared among all products. It does not make any sense to expose this complexity to each localization case and require preparing each separately for this exception.

Second, sometimes an application is specific enough to use a very specific translation of a given word. Maybe it is a medical application? Low level development tool or for lawyers only? In that case, once again, the difference is easy to catch and there’s a very clear layer on which we should make the switch. Exposing it lower in a stack, for each entity use, does not make sense.

Third, it is possible that a very single use of an entity may require different translation for a given language. That’s an extremely rare case, but legitimate. Once again, it doesn’t make sense to leak this complexity onto others.

Common Pool is addressing exactly this type of localizations. Simple, repetitive entities that are shared among many products. In order to address the exceptions, we’re adding a system of overlays which allow a localizer to specify separate translation on one of the given three levels (possibly more).

L20n and Common Pool are complementing each other and we’d like to make sure that they can be used together depending on the potential complexity of the entity.

Rich Content Localization

The third type is very different from the two above. Mozilla today produces a lot of content that goes way beyond product UI and localization formats are terrible when dealing with such rich content – sentences, paragraphs, pages of text mixed with some headers and footers that fill all of our websites.

This content is also diversified, SUMO or MDC articles may be translated into a significantly different layout and their source versions are often updated with minor changes that should not invalidate the whole content. On the other hand small event oriented websites like Five Years of Firefox or Browser Choice have different update patterns than project pages like Test Pilot or Drumbeat.

In that case, trying to build this social contract between developers and localizers by wrapping some piece of text into uniquely identifiable objects called entities and using some way to sign them and match translation to source like we do with product UI doesn’t make sense. Localizers need great flexibility, some changes should be populated to localizations automatically, only some should invalidate them.

For this last case, we need very different tools, that are specific for document/web content localization and if you ever tried Verbatim or direct source HTML localization you probably noticed how far it is from an optimal solution.

Is that all?

No. I don’t think so. But those are the three that we identified and we believe we have ideas on how to address them using modern technologies. If you see flaws in this logic, make sure to share your thoughts.

Why I’m writing about this?

Well, I’m lucky enough to be part of L10n-Drivers team in Mozilla, and I happen to be involved in different ways in experiments and projects that are going to address each of those three concepts. It’s exciting to be in a position that allows me to work on that, but I know that we, l10n-drivers, will not be able to make it on our own.

We will need help from the whole Mozilla project. We will need support from people who produce content, create interfaces and who of course from those who localize, from all of you.

This will be a long process, but it gives us a chance to bring localization to the next level and for the first time ever, make computer user interfaces look natural.

In each of the following blog posts I’ll be focusing on one of the above types of localizations and will present you projects that aim at this goal.

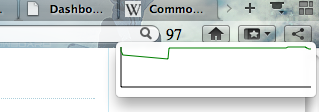

Tremendous success of the Hard Blockers Counter extension (it got over 300 daily users and 4 positive reviews – yet they were lost in the transition :() motivated me to spend another too-early-morning on adding a history plot for the counter.

Tremendous success of the Hard Blockers Counter extension (it got over 300 daily users and 4 positive reviews – yet they were lost in the transition :() motivated me to spend another too-early-morning on adding a history plot for the counter.