Between 2017 and 2018 we refactored a major component of the Gecko Platform – the intl/locale module. The main motivator was the vision of Multilingual Gecko which I documented in a blog post.

Firefox 65 brought the first major user-facing change that results from that refactor in form of Locale Selection. It’s a good time to look back at the scale of changes. This post is about the refactor of the whole module which enabled many of the changes that we were able to land in 2019 to Firefox.

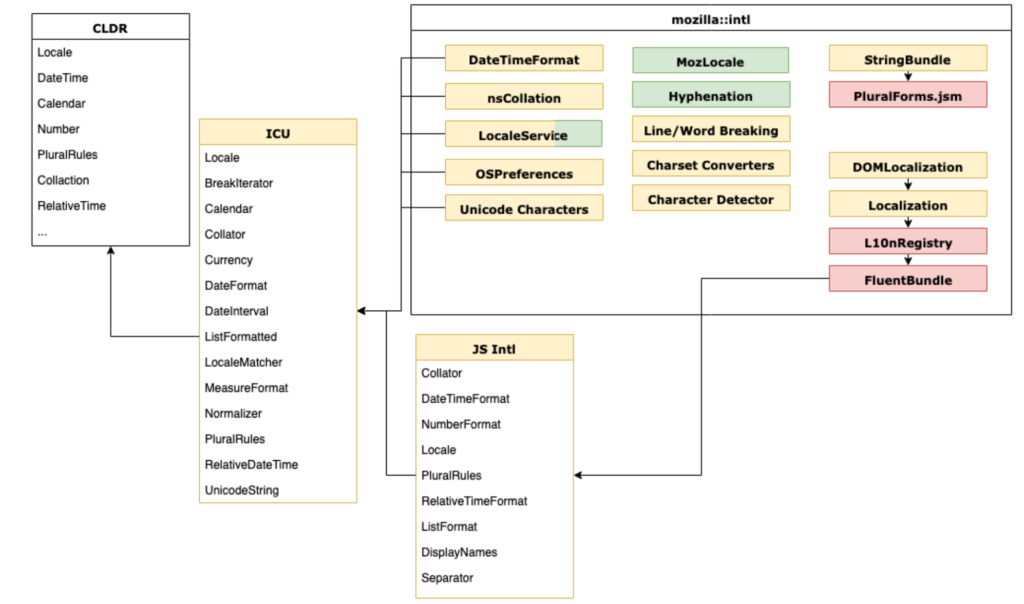

All of that work led to the following architecture:

Yellow – C++, Red – JavaScript, Green – Rust

Evolution vs Revolution

It’s very rare in software engineering that projects of scale (a Gecko module with close to 500 000 lines of code) go through such a major transition. We’ve done it a couple times, with Stylo, WebRender etc., but each case is profound, unique and rare.

There are good reasons not to touch an old code, and there are good reasons against rewriting such a large pile of code.

There’s not a lot of accrued organizational knowledge about how to handle such transitions, what common pitfalls await, and how to handle them.

That makes it even more unique to realize how smooth this change was. We started 2017 with a vision of putting a modern localization system into Firefox, and a platform that required a substantial refactor to get there.

We spent 2017 moving thousands of lines of 20 years old code around, and replacing them with a modern external library – ICU. We designed a new unified locale management and regional preferences modules, together with new internationalization wrappers finishing the year by landing Fluent into Gecko.

To give you a rough idea on the scope of changes – only 10% of ./intl/locale directory remained the same between Firefox 51 and 65!

In 2018, we started building on top of it, witnessing a reduced amount of complex work on the lower level, and much higher velocity of higher level changes with three overarching themes:

- Migrate Firefox to Fluent

- Improve Firefox locale management and multilingual use cases

- Upstream all of what we can to be part of the Web Platform

We then ended up Q1 2019 with a Fluent 1.0 release, close to 50% of DTD strings migrated to Fluent, with all major new JavaScript ECMA402 APIs based on our Gecko work and what’s even more important, with proven runtime ability to select locales from the Preferences UI.

What’s amazing to me, is that we avoided any major architectural regression in this transition. We didn’t have to revisit, remodel, revert or otherwise rethink in any substantial way any of the new APIs! All of the work, as you can see above, was put into incremental updates, deprecation of old code, standardization and integration of the new. Everything we designed to handle Firefox UI that has been proposed for standardization has been accepted, in roughly that form, by our partners from ECMA TC39 committee, and no major assumption ended up being wrong.

I believe that the reason for that success is the slow pace we took (a year of time to refactor, a year to stabilize), support from the whole organization, good test coverage and some luck.

On December 26th 2016 I filed a bug titled “Unify locale selection across components and languages“. Inside it, you can find a long list of user scenarios which we dared to tackle and which, at the time, felt completely impossible to provide.

Two years later, we had the technical means to address the majority of the scenarios listed in that bug.

Three new centralized components played a crucial role in enabling that:

LocaleService

LocaleService was the first new API. At the time, Firefox locale management was decentralized. Multiple components would try to negotiate languages for their own needs – UI chrome API, character detection, layout etc.

They would sometimes consult nsILocaleService / nsILocale which were shaped after the POSIX model and allowed to retrieve the locale based on environmental, per-OS, variables.

There was also a set of user preferences such as general.useragent.locale and intl.locale.matchOS which some of the components took into account, and others ignored.

Finally, when a locale was selected, the UI would use OS specific calls to perform internationalization operations which depended on what locale data and what intl APIs were available in the platform.

The result was that our UI could easily become inconsistent, jumping between POSIX variables, user settings, and OS settings, leading to platform-specific bugs and users stuck in a weird mid-air state with their UI being half in one locale, and half in the other.

The role of the new LocaleService was to unify that selection, provide a singleton service which will manage locale selection, negotiation and interaction with platform-specific settings.

LocaleService was written in C++ (I didn’t know Rust at the time), and quickly started replacing all hooks around the platform. It brought four major concepts:

- All APIs accepted and returned fallback lists, rather than a single locale

- All APIs manage their state through runtime negotiation

- Unify all language tags around Unicode Locale Identifier standard

- Events were fired to notify users on locale changes

The result, combined with the introduction of IPC for LocaleService, led us in the direction of cleaner system that maintains its state and can be reasoned about.

In the process, we kept extending LocaleService to provide the lists of locales that we should have in Gecko, both getters and setters, while maintaining the single source of truth and event driven model for reacting to runtime locale changes.

That allowed us to make our codebase ready for, first locale selection, and then runtime locale switching, that Fluent was about to make possible.

Today LocaleService is very stable, with just 8 bugs open, no feature changes since September 2018, up-to-date documentation and a ~93% test coverage.

OSPreferences

With the centralization of internationalization API around our custom ICU/CLDR instance, we needed a new layer to handle interactions with the host environment. This layer has been carved out of the old nsILocaleService to facilitate learning user preferences set in Windows, MacOS, Gnome and Android.

It has been a fun ride with incremental improvements to handle OS-specific customizations and feed them into LocaleService and mozIntl, but we were able to accomplish the vast majority of the goals with minimum friction and now have a sane model to reason about and extend as we need.

Today OSPreferences is very stable, with just 3 bugs open, no feature changes since September 2018, up-to-date documentation and a ~93% test coverage.

mozIntl

With LocaleService and OSPreferences in place we had all the foundation we needed to negotiate a different set of locales and customize many of the intl formatters, but we didn’t have a way to separate what we expose to the Web from what we use internally.

We needed a wrapper that would allow us to use the JS Intl API, but with some customizations and extensions that are either not yet part of the web standard, or, due to fingerprinting, cannot be exposed to the Web.

We developed `mozIntl` to close that gap. It exposes some bits that are internal only, or too early for external adoption, but ready for the internal one.

mozIntl is pretty stable now, with a few open bugs, 96% test coverage and a lot of its functionality is now in the pipeline to become part of ECMA402. In the future we hope this will make mozIntl thinner.

2019

In 2019, we were able to focus on the migration of Firefox to Fluent. That required us to resolve a couple more roadblocks, like startup performance and caching, and allowed us to start looking into the flexibility that Fluent gives to revisit our distribution channels, build system models and enable Gecko based applications to handle multilingual input.

Gecko is, as of today, a powerful software platform with a great internationalization component leading the way in advancing Web Standards and fulfilling the Mozilla mission by making the Web more accessible and multilingual. It’s a great moment to celebrate this achievement and commit to maintain that status.